NVIDIA has launched its next-generation AI computing platform, “Vera Rubin,” at the ongoing CES 2026 in Las Vegas. During the announcement, Jensen Huang said the platform is now in full production. According to the company, the main goal of Vera Rubin is to make AI computing cheaper while also speeding up how fast models are trained and deployed.

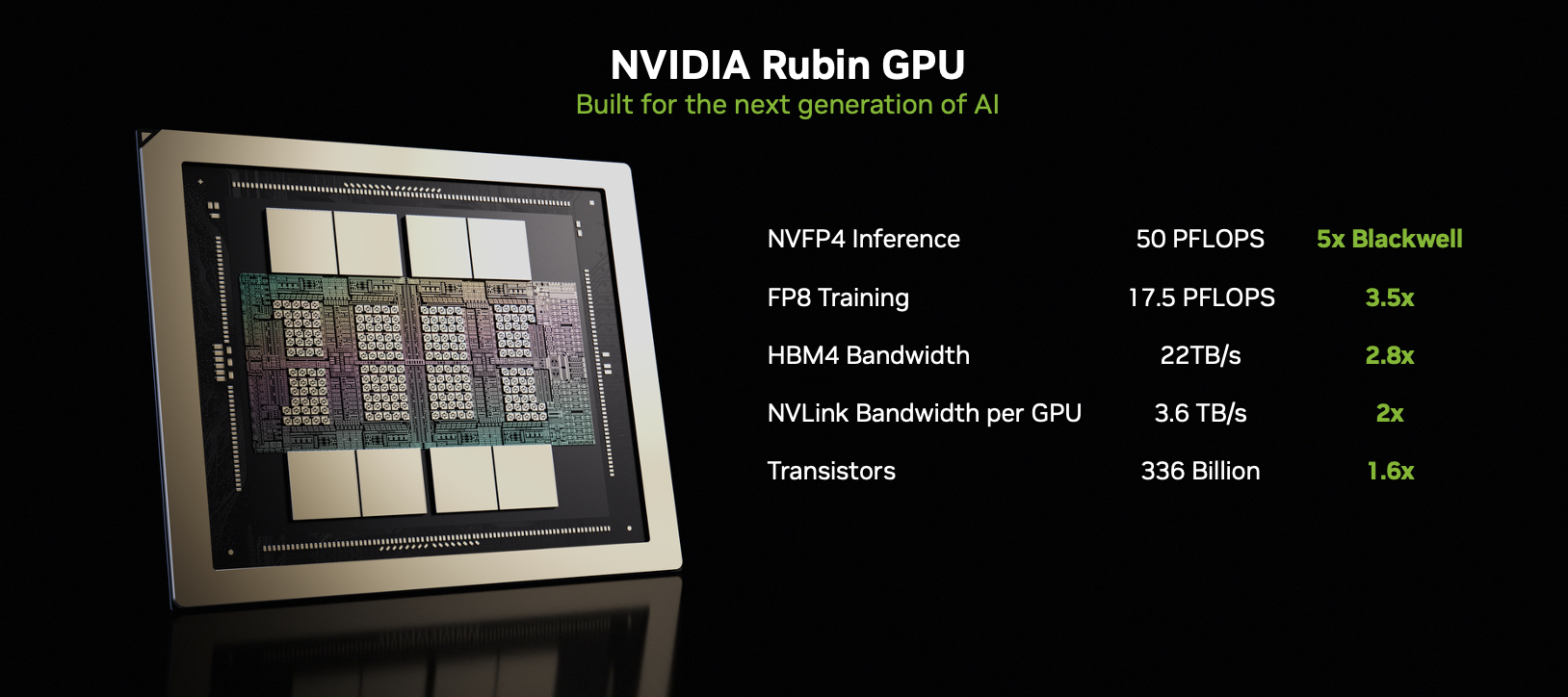

The platform is built for large-scale AI workloads and is obviously aimed at data centres, cloud providers, and enterprises working on advanced AI systems. NVIDIA positions it as a direct successor to its Blackwell architecture, which currently powers many high-end AI data centres. With Rubin, the company is trying to push AI infrastructure. Here, the timing is interesting as the current cost of running and scaling AI models is becoming a major concern for the industry.

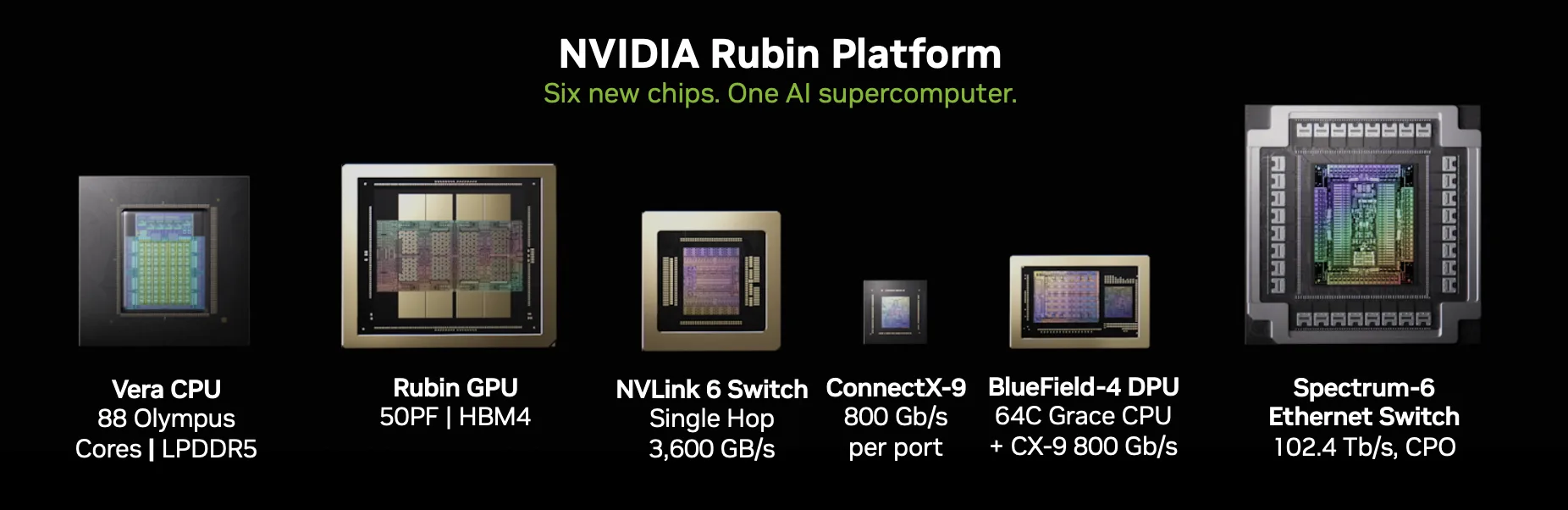

One of the biggest changes with Rubin is in its design. NVIDIA says that this is its first six-chip AI platform developed using what it calls an “extreme codesign” approach. Where instead of treating each component separately, the company designed the GPU, CPU, networking, and data processing units together.

The platform includes Rubin GPUs, Vera CPUs, NVLink 6, Spectrum-X Ethernet Photonics, ConnectX-9 networking cards, and BlueField-4 data processing units. This design is said to reduce system bottlenecks and improve performance for large AI workloads running across racks.

At the heart of the platform is the Rubin GPU, which delivers up to 50 petaflops of inference performance using NVFP4 precision. The Vera CPU is focused on handling data movement and AI agent processing tasks, which NVIDIA says is becoming increasingly important as models move beyond simple inference and into more autonomous, agent-based systems. The company also says Rubin supports large-scale training and inference more efficiently than previous platforms.

Interestingly, the company is making strong claims around cost as well. According to NVIDIA, Rubin can reduce the cost of generating AI tokens to around one-tenth compared to earlier platforms. Alongside this, the chip giant introduced a new AI-focused storage system called Inference Context Memory Storage, which is designed to improve long-context AI processing by increasing speed and efficiency.

The idea here is to better support models that need to process very large amounts of data over longer contexts without driving up infrastructure costs.

From a broader perspective, NVIDIA says the Rubin platform will be used alongside its open AI models and software tools to support applications in areas such as autonomous driving, robotics, healthcare, and climate research.

The announcement also started discussion on social media. X user Sawyer Merritt shared a video of Rubin chips from the CES stage. Responding to the post, Elon Musk said it would take another nine months or so before the hardware is operational at scale and the software works well.

Personally, I think platforms like Vera Rubin matter less for their raw performance numbers and more for what they do to AI economics. If the claims around lower token costs and better efficiency hold up, it could make advanced AI systems more accessible to companies that currently struggle with infrastructure expenses.

Apart from that, cheaper AI computing could also mean faster experimentation and wider deployment, instead of AI being limited to a small group of well-funded players.

I also feel that end users could benefit indirectly if AI computing costs continue to come down. Lower infrastructure costs usually translate into cheaper cloud services, which could affect everything from chatbots and image generation tools to enterprise AI software. Over time, this could also help push more capable AI features into everyday products.

That said, it is still early. Vera Rubin is a major architectural shift, but real-world impact will depend on how quickly it scales, how stable the software stack becomes, and how widely partners adopt it. For now, NVIDIA has made its intent clear: the future of AI computing, at least in its view, has to be faster, more efficient, and cheaper than what came before.