Smart glasses have come a long way since their early days around 2013. Today, AI Glasses are one of the most talked-about categories in consumer tech.

At first glance, they look like normal glasses, but inside them sits a mix of sensors, microphones, displays, and artificial intelligence. They let you get quick information without pulling out your phone. Some people are so optimistic about these glasses that they claim AI Glasses will replace smartphones altogether.

That’s why in this article I decided to talk about what AI Glasses are, how they work, their use cases, their future, and more. So without wasting any more time, let’s get started.

What Are AI Glasses?

AI Glasses are nothing but regular eyeglasses with artificial intelligence features built directly into the frames. They give hands-free assistance by using voice, audio, sensors, and sometimes a small display inside the lens. The primary goal of these glasses is to provide information without needing a phone in your hand.

Modern AI Glasses have evolved from earlier smart glasses that focused heavily on visual AR overlays. Today’s models focus more on practical, everyday AI assistance.

How Do AI Glasses Work?

AI glasses work through a simple system that includes input, processing, and output. The input comes from microphones, sensors, and in some models, cameras that capture what the wearer sees or asks for. These devices often include motion sensors like IMUs to track small head movements as well.

Once the glasses get input, the data is processed by a mix of on‑device computing and cloud‑based AI. Simple tasks, like recognizing a command, can be handled on the device. More complex tasks, like understanding or identifying what is in front of you, are sent to cloud AI for deeper analysis, because on-device AI is still not capable enough to handle them.

After that, the output is delivered to the user. Some glasses respond via speakers built into the frame, while others use a small display that projects information onto the lens.

For example, the Meta Ray‑Ban Display glasses include a tiny screen that shows apps, media, and notifications right in your line of sight.
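The input, processing, and output flow described above can be sketched in a few lines of Python. This is only an illustration, not how any real product is built: the task names, the `Request` class, and the `route`, `deliver`, and `handle` functions are all made up for this example, and the rule of sending anything that is not a simple command to the cloud is an assumption.

```python
from dataclasses import dataclass

# Hypothetical task names, used only for illustration.
# Simple tasks that this sketch treats as light enough for the glasses.
ON_DEVICE_TASKS = {"wake_word", "voice_command"}


@dataclass
class Request:
    task: str    # what the wearer wants done
    source: str  # where the input came from: "microphone", "camera", or "imu"


def route(request: Request) -> str:
    """Processing step: decide where a request is handled."""
    if request.task in ON_DEVICE_TASKS:
        return "on_device"
    # Assumption: anything heavier (scene understanding, object
    # identification, and so on) goes to cloud AI.
    return "cloud"


def deliver(text: str, has_display: bool) -> str:
    """Output step: use the lens display if there is one, else the speakers."""
    channel = "display" if has_display else "speaker"
    return f"{channel}: {text}"


def handle(request: Request, has_display: bool = False) -> str:
    """Run one request through input -> processing -> output."""
    where = route(request)
    return deliver(f"{request.task} handled {where}", has_display)


print(handle(Request("voice_command", "microphone")))
print(handle(Request("scene_understanding", "camera"), has_display=True))
```

The first call stays on the device and answers through the speaker, while the second is routed to the cloud and shown on the display. A real pair of glasses would also weigh things like connectivity and battery level, which this sketch leaves out.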

What’s the Difference Between AI Glasses and AR Glasses?

| Category | AI Glasses | AR Glasses |
| --- | --- | --- |
| Main Purpose | Provide hands‑free AI assistance like voice answers, translation, scene understanding, and simple visual cues. | Overlay digital 3D graphics and virtual objects onto the real world for immersive interactions. |
| Display | Often no display or only minimal HUD‑style text and icons; many models are audio‑first. | Full visual overlays using waveguides or optical engines to place digital content into your field of view. |
| Design | Lightweight and designed to look like regular eyeglasses for everyday wear. | Bulkier because of optics, projection systems, and sensors needed for AR graphics. |
| Key Technologies | Voice assistants, AI models, object recognition, translation, audio output. | Spatial mapping, 3D rendering, environmental tracking, hand/eye tracking. |
| Use Cases | Everyday tasks like quick answers, travel help, notifications, capturing content. | Immersive tasks like training, gaming, industrial workflows, and real‑time spatial instructions. |
| Consumer Adoption | Growing quickly because they look normal and offer practical AI features. | Still limited in the consumer market due to bulk, cost, and use‑case complexity. |
| Overall Focus | Intelligence and assistance. | Graphics and augmented visuals. |

Key Features and Use Cases of AI Glasses

Now you might think that, since AI Glasses are in their early stages, the features and use cases might be limited. But that’s not true! For example, these glasses can handle real‑time translation of conversations, which comes in really handy while traveling or working in multilingual environments. Models that include a camera can also help identify objects or understand scenes.

Apart from that, AI Glasses can provide navigation help, answer questions, update you on the weather, or help you remember things like where you parked your car, and more. Some models include basic apps, photography, audio playback, or ways to post content directly to social media.

Power and Battery

Since there is not much real estate on the glasses, the batteries are hidden in the arms of the frames. The glasses rely on low‑power sensors and optimized chips to keep battery consumption down. Plus, the tasks that require more processing are sent to the cloud to reduce load on the glasses themselves. AI Glasses charge through a regular USB‑C port or a charging case, similar to other wearables.

Privacy Considerations

Privacy is a hot topic when it comes to AI Glasses, as many of them are packed with cameras and sensors. That’s the reason some models avoid having cameras altogether to reduce concerns, as seen with the Even G1, which only uses audio and motion data.

Camera‑enabled models, on the other hand, include visible indicators so people know when recording is active. It’s like that green dot on your iPhone when the camera is being used. Apart from that, since many glasses rely on cloud processing, companies have to manage how data is sent and stored. As a result, the familiar privacy trade-off between Cloud AI and On-Device AI applies here as well.

AI Glasses Market

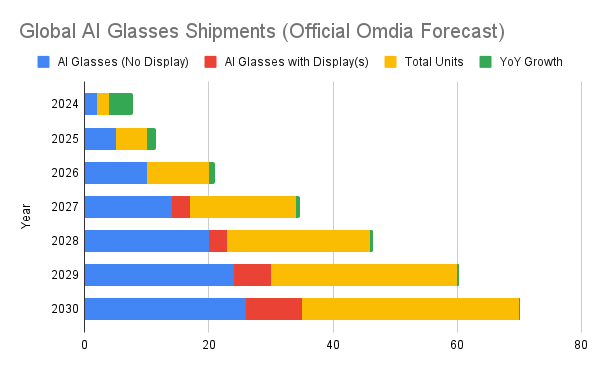

The AI glasses market is growing fast, with Omdia reporting that global shipments will surpass 10 million units in 2026 as major players like Google and Xiaomi ramp up their involvement. And looking ahead, shipments could reach 35 million units by 2030, showing just how quickly this new category is taking off.
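To put those two figures in perspective, here is a quick back-of-the-envelope calculation of the yearly growth they imply. It treats 10 million units in 2026 as the starting point and 35 million in 2030 as the end point, and assumes steady compound growth in between. That is a simplification of my own, not something Omdia states, and the function name `implied_annual_growth` is just for this example.

```python
def implied_annual_growth(start_units: float, end_units: float, years: int) -> float:
    """Compound annual growth rate needed to go from start_units to end_units."""
    return (end_units / start_units) ** (1 / years) - 1


# Figures from the Omdia forecast quoted above; the 2026 number is a
# "will surpass" floor, so the real rate could be a bit lower.
rate = implied_annual_growth(10_000_000, 35_000_000, 2030 - 2026)
print(f"Implied growth: {rate:.1%} per year")
```

Under these assumptions, shipments would need to grow by roughly 37% every year for four years in a row.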

> Integrating intelligence into everyday glasses has the potential to transform the way we experience daily life. Unlike wrist-worn wearable band devices, AI glasses encounter challenges such as privacy concerns from built-in cameras and microphones, as well as social resistance to all-day wear. These factors may hinder widespread adoption beyond early enthusiasts in the short term.
>
> Jason Low, Omdia Research Director

Future of AI Eyewear

The future of AI hardware is starting to look quite promising compared to what most people expected a few years ago. Instead of bulky AR headsets or mixed reality goggles, the focus is clearly shifting toward AI glasses that look like normal eyewear. Many people in the industry believe this could be the next big platform in personal computing, and some even think it could slowly replace the smartphone.

If we go by the reports from Forbes, companies like Meta and EssilorLuxottica are investing heavily in this idea. At the same time, consumer interest in AR headsets has cooled off. While there are some industries where AR glasses are in use, they haven’t clicked with everyday users the way many companies hoped.

What’s really pushing AI Glasses forward now is that they finally feel practical. Earlier attempts, like Google Glass, failed largely because they were bulky, awkward, or too attention-grabbing. That’s changing.

Today’s AI glasses pack useful features into frames that look normal, and that has made a huge difference. The strong demand for Ray-Ban Meta smart glasses, especially among creators and travelers, shows there’s real interest in hands-free tools that actually help in daily life. Besides, AI itself is also more capable now, handling things like object recognition, translation, and quick summaries in real time.

So whether these glasses will fully replace smartphones or not is still debatable, and only time will tell. But for now, the trend is clear. AI Glasses are seen as one of the most promising directions in personal computing. And many people, including me, are optimistic that these glasses could become an important part of how we interact with technology in the years ahead.