These days, the term AI has been slapped on almost every product out there, whether it’s software or hardware. Your phone has AI, and so does your PC. Even your search engine is basically a conversation with a robot. It would not be wrong to say that AI is a revolution happening right now, but have you ever thought about what actually runs this revolution? It’s not magic. It’s silicon.

For years, computers relied on two main types of processors: the CPU (central processing unit) and the GPU (graphics processing unit). Now there is a new name on the market: the NPU, or neural processing unit. All three terms have become popular lately, thanks to the rise of AI. So if you are trying to figure out which of these chips actually powers AI, the short answer is the GPU, but the real answer is more interesting. Let’s look at each processing unit in detail to see which one powers which type of AI.

CPU

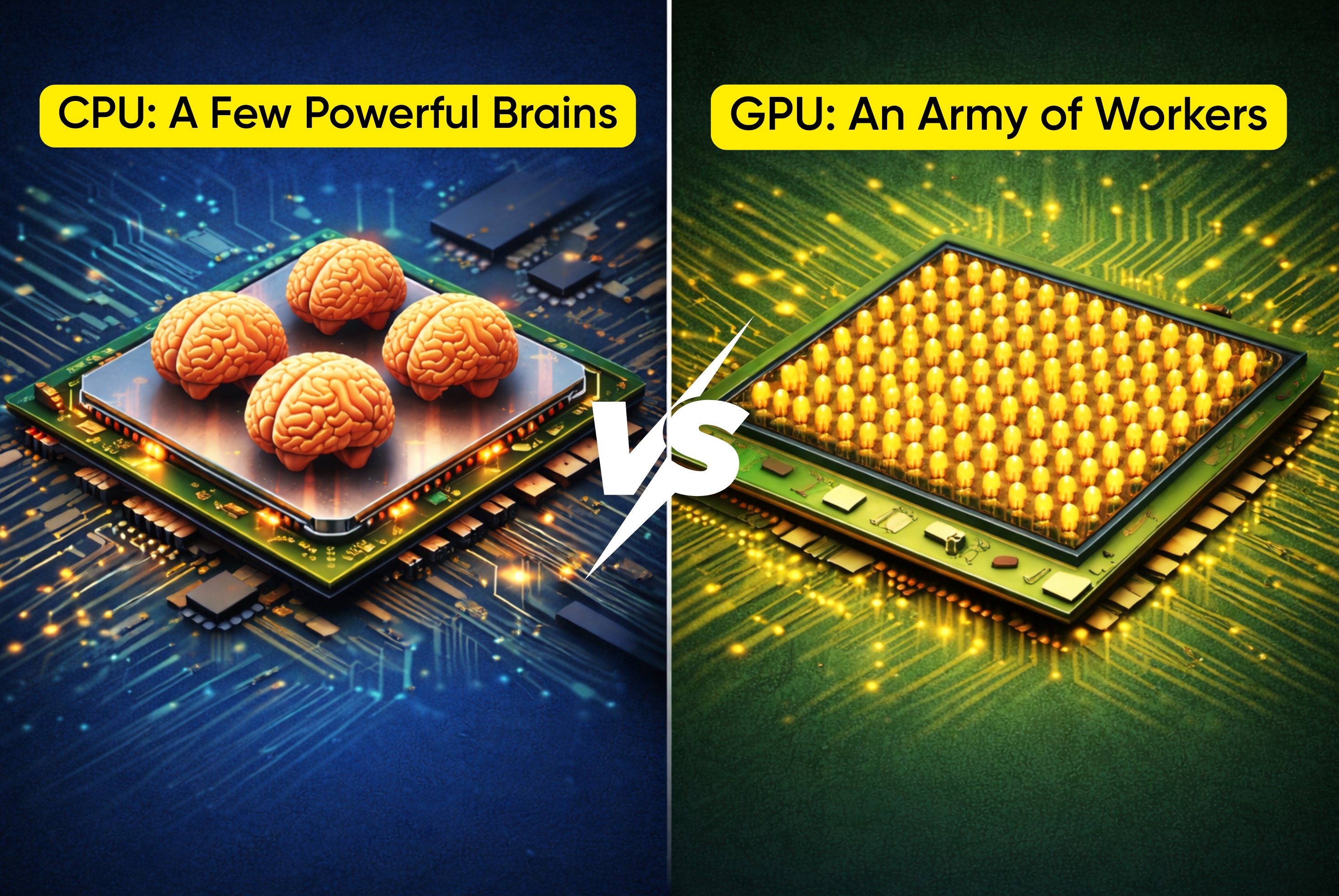

The CPU is the original brain of your computer. It is designed for flexibility and is the master of all trades. You can think of it like a head chef at a restaurant who can cook any dish you ask for by following the recipe step-by-step with incredible precision.

But how does it handle AI? The CPU treats an AI task like any other job: a long list of instructions. That makes it reliable, but it works through those instructions largely in sequence, on only a handful of cores at a time. Modern AI workloads need billions of calculations, so on a CPU they become painfully slow and inefficient.
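To get a feel for the numbers, here is a minimal sketch of matrix multiplication, the core operation inside most neural networks, written the way a single sequential worker would grind through it: one multiply-add at a time. The function names and the matrix sizes are illustrative assumptions, not taken from any real model.

```python
def matmul_sequential(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n), one multiply-add at a time.

    Returns the product and the number of multiply-add steps performed.
    """
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    result = [[0.0] * n for _ in range(m)]
    steps = 0
    for i in range(m):
        for j in range(n):
            for p in range(k):
                # A single multiply-add: the basic unit of work in matrix multiplication.
                result[i][j] += a[i][p] * b[p][j]
                steps += 1
    return result, steps


def multiply_adds(m, k, n):
    """Multiply-adds needed for an (m x k) @ (k x n) product."""
    return m * k * n


product, steps = matmul_sequential([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)  # [[19.0, 22.0], [43.0, 50.0]]
print(steps)    # 8

# A hypothetical layer: 4096 x 4096 weights applied to a batch of 4096 inputs.
print(f"{multiply_adds(4096, 4096, 4096):,}")  # 68,719,476,736
```

A single hypothetical layer of that size already needs close to 69 billion multiply-adds, and a model runs many such layers. Stepping through work like that in order, as this loop does, is what makes a mostly sequential processor so slow for AI.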

It’s like asking that one brilliant chef to personally cook 1,000 burgers for a stadium crowd. They can do it, but it will take forever, and they will burn out.

That doesn’t make the CPU irrelevant to AI; it’s still the boss. It runs your operating system, manages your files, and tells the other, more specialised chips what to do. In other words, the CPU is the manager, not the workforce.

GPU

The GPU was never originally meant for AI. It was built for one thing: rendering graphics for video games. To render a character’s hair realistically or create lifelike explosions, a chip needs to perform thousands of small, identical calculations at the same time.

But it turns out that training a large AI model, like the ones behind ChatGPT, Gemini, or Midjourney, requires the same kind of maths: billions of simple calculations done in parallel.

When it comes to AI, a GPU works like a massive factory assembly line with thousands of specialised workers. Each worker does one simple task, but together they assemble something complex at incredible speed. This parallel processing power is what made GPUs the undisputed kings of AI training.
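Why does this maths suit an assembly line? In a matrix multiplication, each output element depends only on one row of the first matrix and one column of the second, never on another output element. The minimal sketch below is plain Python, so it still runs one step at a time, but it makes that independence visible by filling in the elements in a random order and always arriving at the same answer. A GPU exploits the same property by giving each element to a different worker. The helper names are illustrative, not from any GPU library.

```python
import random


def output_element(a, b, i, j):
    """Compute one output element: the dot product of row i of a and column j of b."""
    return sum(a[i][p] * b[p][j] for p in range(len(b)))


def matmul_any_order(a, b, seed=0):
    """Fill the output in a shuffled order to show no element depends on another."""
    m, n = len(a), len(b[0])
    tasks = [(i, j) for i in range(m) for j in range(n)]
    random.Random(seed).shuffle(tasks)  # the order of the tasks doesn't matter...
    result = [[0] * n for _ in range(m)]
    for i, j in tasks:  # ...so on parallel hardware, these could all run at once
        result[i][j] = output_element(a, b, i, j)
    return result


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_any_order(a, b, seed=1))  # [[19, 22], [43, 50]]
print(matmul_any_order(a, b, seed=1) == matmul_any_order(a, b, seed=42))  # True
```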

NVIDIA leads the GPU space today, and its GPUs power most of the world’s AI cloud infrastructure. When you ask an AI model to write an email or generate an image, that request is likely being processed in a data centre somewhere, packed with thousands of GPUs.

When it comes to market share, NVIDIA continues to hold over 90% of the AI data center GPU segment, according to recent industry reports from sources like IoT Analytics and Jon Peddie Research. This dominance is driven by the fact that training a single large language model still demands thousands of these GPUs running for weeks, with electricity costs alone reaching millions of dollars.
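The electricity figure is easy to sanity-check with back-of-the-envelope arithmetic: energy is the number of GPUs times the power each draws times the hours they run, and cost is that energy times the price per kilowatt-hour. Every input below (GPU count, wattage, duration, price) is a hypothetical round number chosen for illustration, not a figure from the reports cited above, and the sketch ignores cooling and other data-centre overhead.

```python
def training_electricity_cost(num_gpus, watts_per_gpu, days, usd_per_kwh):
    """Rough electricity cost of a training run, ignoring cooling and other overhead."""
    hours = days * 24
    kwh = num_gpus * (watts_per_gpu / 1000) * hours  # energy = power x time
    return kwh * usd_per_kwh


# Hypothetical run: 10,000 GPUs at 700 W each for 60 days at $0.10 per kWh.
cost = training_electricity_cost(num_gpus=10_000, watts_per_gpu=700, days=60, usd_per_kwh=0.10)
print(f"${cost:,.0f}")  # $1,008,000
```

Adding cooling overhead, or scaling up the GPU count or run length, pushes such estimates higher, which is how a single training run can reach the millions of dollars mentioned above.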

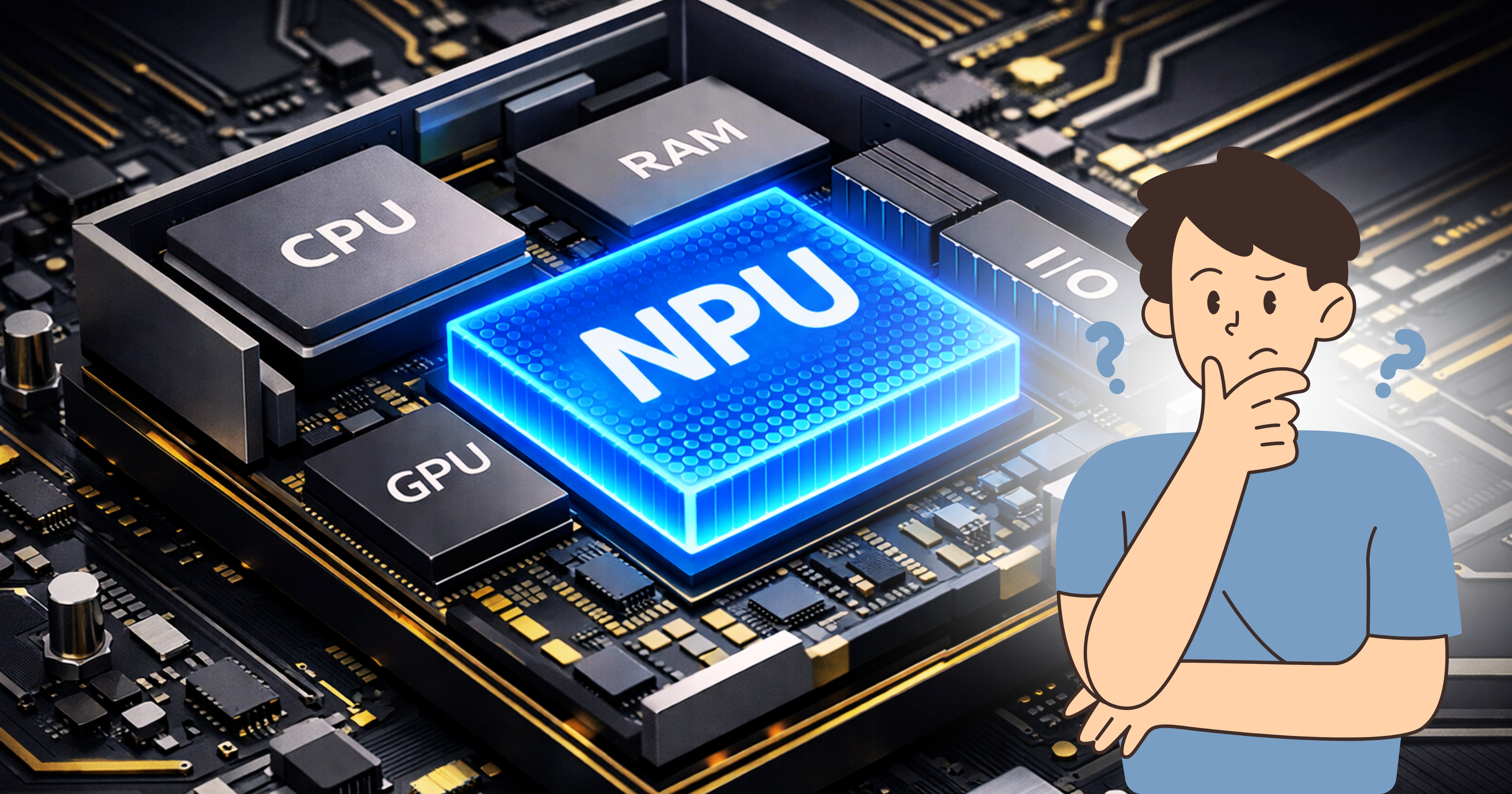

NPU

The NPU is the latest player in the game, and it’s built for one purpose: to run AI tasks efficiently. So if the CPU is the coordinator and the GPU is a huge factory, the NPU is a small, ultra-focused robot that does one specific task extremely well, with almost no wasted energy.

The main job of an NPU is inference, which means running an AI model that has already been trained.

An NPU’s circuitry is physically designed to perform the exact type of math used in neural networks (matrix multiplication and a handful of other operations). It doesn’t need the flexibility of a CPU or the raw power of a GPU. This specialization makes it very fast at AI tasks while sipping power, which makes it ideal for battery-powered devices like laptops and phones.
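The "exact type of math" an NPU is built for fits in a few lines. Below is a minimal sketch of inference through one fully connected neural-network layer: a matrix-vector multiplication, an added bias, and a ReLU activation (one common example of the "other operations"). The weights are made-up numbers standing in for a model that has already been trained; an NPU performs this same pattern in dedicated hardware, usually at far larger sizes.

```python
def relu(x):
    """ReLU activation: keep positive values, replace negatives with zero."""
    return max(0.0, x)


def dense_layer(weights, bias, inputs):
    """One fully connected layer: outputs = relu(weights @ inputs + bias)."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, bias)
    ]


# Hypothetical "already trained" parameters: 3 outputs from 2 inputs.
# During inference these stay fixed; only the inputs change.
trained_weights = [[0.5, -1.0], [1.5, 0.25], [-2.0, 1.0]]
trained_bias = [0.1, -0.5, 0.0]

print(dense_layer(trained_weights, trained_bias, [2.0, 1.0]))  # [0.1, 2.75, 0.0]
```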

The NPU is a big reason you can use AI to edit photos on your smartphone. So it would not be wrong to call the NPU the engine of on-device AI.

CPU vs GPU vs NPU

| Feature | CPU | GPU | NPU |
|---|---|---|---|
| Best For | General tasks, system control | Training massive AI models | Running AI models on-device |
| Speed | Fast for single tasks | Fastest for parallel tasks | Fast and efficient for sustained AI tasks |
| Power Use | High (for AI) | Extremely High | Extremely Low |
| Location | In every computer | Data centers, high-end PCs | Modern laptops, smartphones |
| Key Players | Intel, AMD | NVIDIA, AMD | Apple, Qualcomm, Intel |

So, Which One Actually Powers AI?

Here’s the simple truth: they all do, but they have different jobs. It’s not a competition amongst processing units, but a collaboration. Think of it like this:

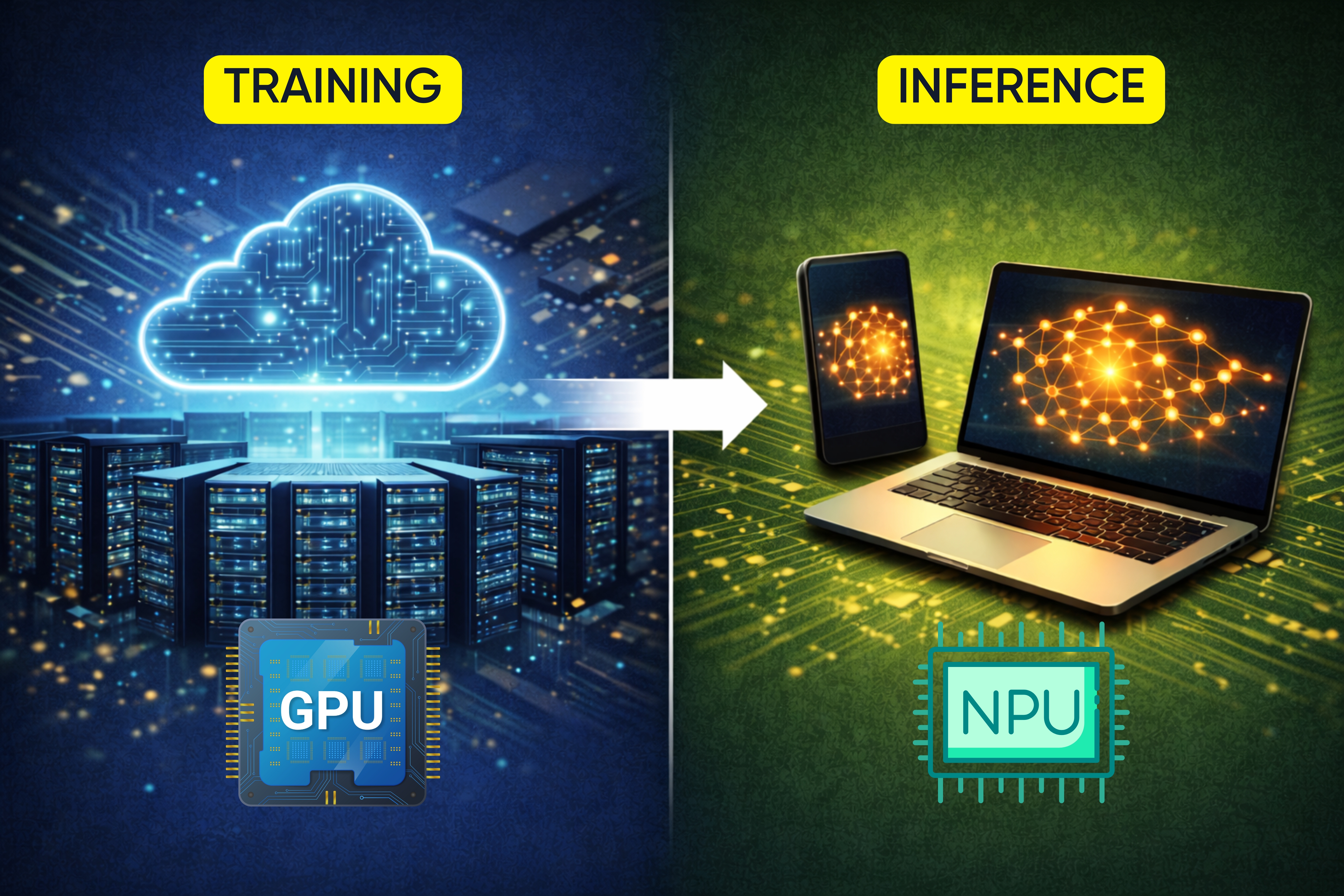

- GPUs in the cloud do the heavy lifting. They spend weeks training a massive AI model.

- That trained model is then optimized and sent to your device.

- On your laptop, the CPU runs Windows or macOS, but when you turn on an AI feature, it hands the job to the NPU.

- The NPU efficiently handles the AI task, like real-time translation, saving battery and freeing up the other chips. If you then ask for a more intense creative task, like AI-generating a video effect, the GPU might kick in to help.
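The division of labour described in this list can be sketched as a simple routing rule. This is purely illustrative: real operating systems and AI frameworks make these decisions through their own runtimes and drivers, and the task categories, the `route_task` function, and the `npu_available` flag below are hypothetical names invented for this example.

```python
from enum import Enum


class Unit(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"


# Hypothetical task categories, loosely following the examples in the list above.
LIGHT_AI_TASKS = {"real-time translation", "background blur", "voice transcription"}
HEAVY_AI_TASKS = {"video effect generation", "image generation"}


def route_task(task, npu_available=True):
    """Pick a processing unit for a task under a toy policy (not a real OS scheduler)."""
    if task in HEAVY_AI_TASKS:
        return Unit.GPU  # intense creative work: lots of parallel maths
    if task in LIGHT_AI_TASKS:
        # Sustained, lightweight AI: NPU if present, otherwise fall back to the GPU.
        return Unit.NPU if npu_available else Unit.GPU
    return Unit.CPU  # everything else: general-purpose work and system control


for task in ["real-time translation", "video effect generation", "open a file"]:
    print(task, "->", route_task(task).value)
```

Sending everything that isn’t AI to the CPU mirrors its role as the manager, while the fallback branch reflects the point that, without an NPU, AI work lands on another chip at a higher power cost.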

FAQs

Is it worth buying a laptop with an NPU?

Yes, if you want smooth AI features like background effects, voice tools, and AI photo editing without hurting battery life. NPUs are quickly becoming standard.

Does AI run on the CPU or the GPU?

Both. GPUs handle heavy AI work, while CPUs manage the system and lighter tasks. Each plays a different role.

What is an NPU?

It’s a dedicated processor built to run AI models efficiently. It speeds up on-device AI while using less power. Examples include Apple’s Neural Engine and Qualcomm’s Hexagon NPU.

Do you need an NPU to use AI features?

Not mandatory, but important. Without an NPU, AI tasks fall back on the CPU or GPU, which is usually slower or less power-efficient and drains more battery.